Answer

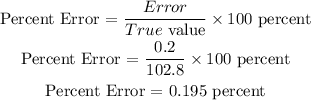

Percent Error ≈ 0.195%

Step-by-step explanation

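Percent error is the size of the error relative to the true value, written as a percentage:

Percent Error = (Error / True value) × 100%

where

Error = | (Incorrect value) - (True value) |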

Incorrect value = 103 miles per hour

True value = 102.8 miles per hour

Error = | (Incorrect value) - (True value) |

Error = | 103 - 102.8 |

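Error = 0.2 miles per hour

Now divide the error by the true value and multiply by 100 to get the percent error:

Percent Error = (Error / True value) × 100%

Percent Error = (0.2 / 102.8) × 100%

Percent Error ≈ 0.195%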
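
If you want to check the arithmetic, here is a minimal Python sketch. It assumes the usual definition of percent error, |incorrect - true| / true × 100. The function name `percent_error` and its parameter names are just made up for this example.

```python
def percent_error(measured: float, true_value: float) -> float:
    """Return the percent error of `measured` relative to `true_value`."""
    if true_value == 0:
        # Percent error is undefined when the true value is zero.
        raise ValueError("true value must be nonzero")
    error = abs(measured - true_value)      # absolute error, same units as the inputs
    return error / abs(true_value) * 100    # error as a percentage of the true value


# Values from this problem: 103 mph (incorrect) vs. 102.8 mph (true).
result = percent_error(103, 102.8)
print(f"{result:.3f}%")  # prints 0.195%
```

The unrounded value is about 0.19455%, which rounds to 0.195%.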

Hope this helps!