Answer:

The driver should travel at a speed of 360/7 ≈ 51.4 mph over the remaining 50 mi so that the average speed of the entire trip is 45 mph.

Explanation:

Given:

Total distance = 100 mi

Speed at which the driver travels the first 50 mi = 40 mph

To Find:

Speed at which the driver should travel over the remaining 50 mi so that the average speed of the entire trip is 45 mph = ?

Solution:

Average speed:

The average speed of an object is the total distance travelled by the object divided by the elapsed time to cover that distance. It's a scalar quantity which means it is defined only by magnitude. A related concept, average velocity, is a vector quantity. A vector quantity is defined by magnitude and direction.
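The definition above can be sketched in a few lines of Python. This is only an illustration: the function name `average_speed` and the sample legs (50 mi at 40 mph, then 50 mi at 60 mph) are assumptions chosen for the example, not values from the problem.

```python
def average_speed(legs):
    """Return total distance divided by total elapsed time.

    legs: list of (distance_mi, speed_mph) pairs (hypothetical input format).
    """
    total_distance = sum(distance for distance, _ in legs)
    # Time for each leg is distance / speed; the times add, the speeds do not.
    total_time = sum(distance / speed for distance, speed in legs)
    return total_distance / total_time


# Illustrative legs only: equal distances at 40 mph and 60 mph.
legs = [(50, 40), (50, 60)]
print(round(average_speed(legs), 2))
```

For these sample legs the result is about 48 mph rather than the arithmetic mean of 50 mph, because more time is spent on the slower leg.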

Let X be the speed (in mph) at which the driver should travel over the remaining 50 mi so that the average speed of the entire trip is 45 mph.

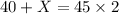

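From the given data we get,

Total time allowed for the trip = 100 mi / 45 mph = 20/9 h ≈ 2.22 h

Time taken for the first 50 mi = 50 mi / 40 mph = 5/4 h = 1.25 h

Time left for the remaining 50 mi = 20/9 h - 5/4 h = 35/36 h ≈ 0.97 h

X = 50 mi / (35/36 h) = 360/7 mph ≈ 51.4 mph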
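The calculation can be checked numerically with a minimal sketch, assuming the trip is split into a first leg of known distance and speed and a second leg covering the rest of the distance. The function name `required_speed` and its parameter names are hypothetical, introduced only for this check.

```python
def required_speed(total_distance, first_distance, first_speed, target_average):
    """Speed needed on the rest of the trip to hit a target average speed.

    All distances are in miles and all speeds in mph (assumed units).
    """
    total_time = total_distance / target_average   # time the whole trip may take
    first_time = first_distance / first_speed      # time already used on the first leg
    remaining_time = total_time - first_time
    if remaining_time <= 0:
        # The first leg alone already used up the time budget.
        raise ValueError("target average speed cannot be reached")
    return (total_distance - first_distance) / remaining_time


speed = required_speed(total_distance=100, first_distance=50,
                       first_speed=40, target_average=45)
print(round(speed, 1))
```

With the given data (100 mi total, first 50 mi at 40 mph, target 45 mph) this evaluates to 360/7, about 51.4 mph.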