Answer:

$B_B = 100\,B_A$, so star A is 100 times fainter than star B.

Step-by-step explanation:

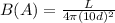

The brightness of a star is given by the inverse-square law,

$$B = \frac{L}{4\pi d^2}$$

Here, $L$ is the luminosity and $d$ is the distance to the star.

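For star A, the distance is $10d$, so the brightness of star A is

$$B_A = \frac{L_A}{4\pi (10d)^2} = \frac{L_A}{400\pi d^2}$$

For star B, the distance is $d$, so the brightness of star B is

$$B_B = \frac{L_B}{4\pi d^2}$$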

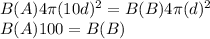

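According to the question, the two stars have equal luminosity, $L_A = L_B$.

Therefore, dividing the brightness of star B by the brightness of star A, the luminosities and the factor $4\pi$ cancel:

$$\frac{B_B}{B_A} = \frac{(10d)^2}{d^2} = 100$$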

So, star B is 100 times brighter than star A.

Therefore, star A is 100 times fainter than star B.
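As a quick numerical check, the same result can be sketched in Python. This assumes the inverse-square law for brightness; the function name `apparent_brightness` and the values `L = 1.0` and `d = 1.0` are illustrative choices, not part of the question. Any positive values give the same ratio.

```python
import math

def apparent_brightness(luminosity, distance):
    """Inverse-square law: brightness = L / (4 * pi * d^2)."""
    return luminosity / (4 * math.pi * distance ** 2)

# Illustrative values in arbitrary units (assumptions, not from the question).
L = 1.0  # luminosity shared by both stars
d = 1.0  # distance to star B

b_a = apparent_brightness(L, 10 * d)  # star A is at distance 10d
b_b = apparent_brightness(L, d)       # star B is at distance d

ratio = b_b / b_a
print(round(ratio))  # 100
```

Because the luminosity and the factor of 4π cancel in the ratio, only the squared distance ratio, (10d / d)² = 100, matters.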