Answer:

14 miles

Step-by-step explanation:

The relation that will be used to solve this problem is:

distance = rate × time

which can be rewritten as:

time = distance ÷ rate
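Here is a minimal Python sketch of the relation above. It assumes distances are in miles and rates are in miles/hour. The function name `travel_time` is hypothetical and chosen only for illustration.

```python
def travel_time(distance_miles: float, rate_mph: float) -> float:
    """Return the travel time in hours, using time = distance / rate."""
    if rate_mph <= 0:
        raise ValueError("rate must be positive")
    return distance_miles / rate_mph


# Example: 70 miles at 35 miles/hour takes 2 hours.
print(travel_time(70, 35))  # 2.0
```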

Assume that:

Distance from your house to your friend's house is d

Time taken to drive from your house to your friend's house is t₁

Time taken to drive from your friend's house back to your house is t₂

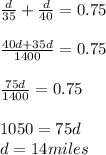

1- From your house to your friend's house:

Average rate = 35 miles/hour

Therefore:

t₁ = d/35

2- From your friend's house to your house:

Average rate = 40 miles/hour

Therefore:

t₂ = d/40

3- Round trip:

The round trip took 45 minutes, which is equivalent to 45/60 = 0.75 hours.

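This means that:

t₁ + t₂ = 0.75 hours

Substituting t₁ = d/35 and t₂ = d/40:

d/35 + d/40 = 0.75

The least common denominator of 35 and 40 is 280, so:

8d/280 + 7d/280 = 0.75

15d/280 = 0.75

d = (0.75 × 280) / 15 = 210 / 15 = 14

Therefore, the distance from your house to your friend's house is 14 miles.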
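Because the equation d/35 + d/40 = 0.75 is linear in d, you can solve and check it exactly using Python's standard `fractions` module. This is only a sketch. The helper name `solve_distance` is hypothetical and does not come from the problem itself.

```python
from fractions import Fraction


def solve_distance(rate_there, rate_back, total_hours):
    """Solve d/rate_there + d/rate_back = total_hours for d.

    Factoring out d gives d * (1/rate_there + 1/rate_back) = total_hours.
    """
    combined = Fraction(1, rate_there) + Fraction(1, rate_back)
    return Fraction(total_hours) / combined


# 45 minutes = 45/60 hours = 3/4 hour
d = solve_distance(35, 40, Fraction(45, 60))
t1 = d / 35  # hours from your house to your friend's house
t2 = d / 40  # hours for the trip back

print(d)  # 14
# Leg times converted to minutes:
print(t1 * 60, t2 * 60)  # 24 21
```

The check confirms the answer: 24 minutes there plus 21 minutes back equals the 45-minute round trip.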

Hope this helps :)